Many Ethel applications consist of multi-step processes that often include decision points, such as determining a user's intent. Within these workflows, the same tasks recur in different contexts, for example document ingestion and referencing, retrieval over a course corpus, or statistical analysis of grading outcomes.

EthelFlow turns these recurring tasks into an explicit workflow layer. Core capabilities are implemented as containerized microservices that can be orchestrated in different combinations and orders to build end-to-end pipelines for teaching and learning.
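A decision point such as determining user intent can be pictured as a router that maps a detected intent to a named workflow, i.e. an ordered list of service steps. The following is a minimal sketch under stated assumptions: the intent labels, workflow names, step names, and the keyword-based `detect_intent` function are all hypothetical placeholders, and a real deployment would more likely use a model-backed classifier than keyword matching.

```python
import re

# Hypothetical workflows: each intent maps to an ordered list of service steps.
WORKFLOWS: dict[str, list[str]] = {
    "ask_course_question": ["retrieve_course_corpus", "chat_answer"],
    "submit_for_grading": ["ingest_document", "score_with_rubric", "risk_filter"],
    "fallback": ["chat_answer"],
}

# Hypothetical keyword rules standing in for a model-based intent classifier.
# Dicts keep insertion order, so the grading intent is checked first.
INTENT_KEYWORDS: dict[str, tuple[str, ...]] = {
    "submit_for_grading": ("grade", "submit", "assignment"),
    "ask_course_question": ("explain", "what", "why", "how"),
}


def detect_intent(message: str) -> str:
    """Return the first intent whose keywords occur in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words.intersection(keywords):
            return intent
    return "fallback"


def route(message: str) -> list[str]:
    """Decision point: choose which workflow (list of steps) to run."""
    return WORKFLOWS[detect_intent(message)]
```

For example, `route("Please grade my assignment")` selects the grading workflow, while a message with no matching keywords falls back to plain chat.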

Microservices and Workflows

EthelFlow generalizes recurring functionality into reusable services. Typical microservices include:

- Document ingestion and preprocessing

- Indexing and retrieval over course-specific corpora

- Chat and conversational orchestration

- Image and handwriting processing

- Grading and rubric-based scoring logic

- Analytics, logging, and reporting

Using workflow orchestration tools, these microservices can be assembled into flexible pipelines. Some steps call AI models in the background, while others are purely deterministic, for example computing item response theory statistics or applying risk filters in grading.
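One way to picture such a pipeline is as an ordered list of steps that each take and return a shared context dictionary, so the same step can be reused in different workflows and orders. The sketch below is an illustration under assumptions, not EthelFlow's actual interface: `ai_score` is a hypothetical stand-in for a model-backed grading service (it returns fixed values instead of calling a model), and `risk_filter` with its 0.7 confidence threshold is a hypothetical example of a deterministic rule that sends uncertain grades to human review.

```python
from typing import Any, Callable

Context = dict[str, Any]
Step = Callable[[Context], Context]


def ingest(ctx: Context) -> Context:
    # Deterministic preprocessing: collapse runs of whitespace in the submission.
    return {**ctx, "text": " ".join(ctx["raw"].split())}


def ai_score(ctx: Context) -> Context:
    # Hypothetical stand-in for an LLM-backed grading service.
    # A real step would send ctx["text"] and a rubric to a model.
    score, confidence = (8.0, 0.9) if len(ctx["text"]) > 20 else (3.0, 0.5)
    return {**ctx, "score": score, "confidence": confidence}


def risk_filter(ctx: Context, threshold: float = 0.7) -> Context:
    # Deterministic rule: low-confidence grades are flagged for human review.
    return {**ctx, "needs_review": ctx["confidence"] < threshold}


def run_pipeline(steps: list[Step], ctx: Context) -> Context:
    """Apply each step in order, passing the updated context along."""
    for step in steps:
        ctx = step(ctx)
    return ctx


result = run_pipeline(
    [ingest, ai_score, risk_filter],
    {"raw": "  The   derivative of x^2 is 2x.  "},
)
```

Because each step returns a new dictionary instead of mutating its input, steps stay independent and can be reordered or reused in other pipelines; swapping the stubbed `ai_score` for a real model call would not change the deterministic `risk_filter`.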

Overall Architecture

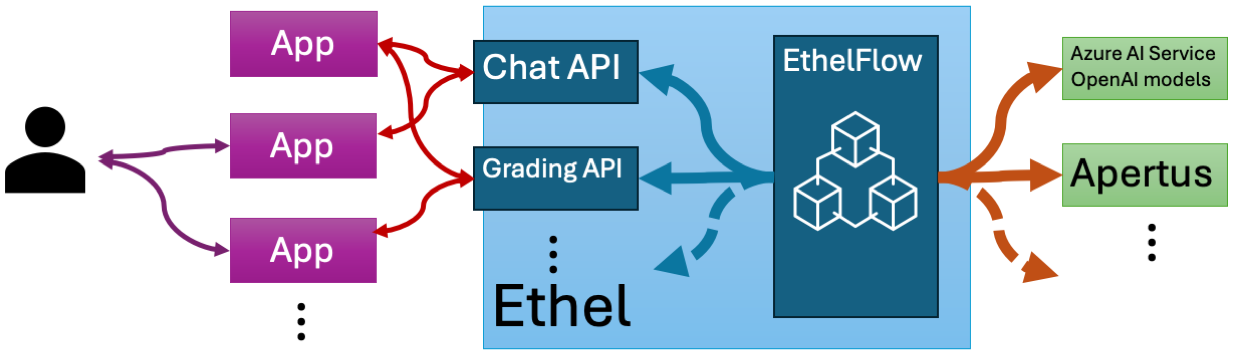

In the broader IT landscape, EthelFlow sits between AI model providers and user-facing applications:

- In the background, many microservices can call either commercial or openly released (“open-weight”) large language models.

- EthelFlow acts as the business logic layer where workflows are defined, decisions are made, and institutional policies are enforced.

- The system exposes several APIs, such as a chat API or a grading API, that other applications can connect to.

- Frontend applications—LMS integrations, exam tools, or standalone web apps—consume these APIs and provide the user-facing interfaces.
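The layering described above can be sketched in a few lines: a common interface hides whether a commercial or an open-weight model sits behind it, the business logic layer applies an institutional policy before any model is called, and a thin API function is what a frontend would consume. All names here (`ModelProvider`, `EchoProvider`, `ChatPolicy`, `handle_chat_request`, the blocked-terms rule) are hypothetical, and the provider returns canned text rather than calling a real model API.

```python
from dataclasses import dataclass
from typing import Protocol


class ModelProvider(Protocol):
    """Common interface for commercial or open-weight model backends."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoProvider:
    # Hypothetical stand-in for a real backend; returns canned text.
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] answer to: {prompt}"


@dataclass
class ChatPolicy:
    # Hypothetical institutional policy enforced in the business logic layer.
    blocked_terms: tuple[str, ...] = ("exam solution",)


def handle_chat_request(
    prompt: str, provider: ModelProvider, policy: ChatPolicy
) -> dict[str, str]:
    """What a chat API endpoint might do before and after calling a model."""
    if any(term in prompt.lower() for term in policy.blocked_terms):
        return {"status": "refused", "reason": "blocked by institutional policy"}
    return {"status": "ok", "provider": provider.name, "reply": provider.complete(prompt)}
```

Replacing `EchoProvider` with any other class that has a `name` and a `complete` method leaves `handle_chat_request`, and therefore the API seen by frontends, unchanged.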

Ethel Commons

Ethel itself is free, open-source software, but not every university is able or willing to run its own installation and maintain the AI infrastructure it requires. Ethel Commons extends EthelFlow into a shared infrastructure that institutions can adopt jointly.

In this model, EthelFlow orchestrates AI workloads across shared infrastructure while still respecting each institution’s data governance and policy requirements. Universities can benefit from a common platform while keeping control over how AI is used in teaching and assessment.
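In a shared deployment, respecting each institution's requirements could mean checking a per-institution policy before choosing where a workload runs. The sketch below assumes a hypothetical policy format (allowed model types and allowed hosting regions) and a hypothetical set of shared backends; it only illustrates policy-aware routing and is not Ethel Commons' actual configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    kind: str    # "commercial" or "open-weight"
    region: str  # where the backend is hosted


@dataclass(frozen=True)
class InstitutionPolicy:
    # Hypothetical governance rules for one institution.
    allowed_kinds: frozenset[str]
    allowed_regions: frozenset[str]


# Hypothetical backends available in the shared infrastructure.
BACKENDS: tuple[Backend, ...] = (
    Backend("commercial-eu", "commercial", "eu"),
    Backend("open-weight-ch", "open-weight", "ch"),
    Backend("commercial-us", "commercial", "us"),
)


def select_backend(
    policy: InstitutionPolicy, backends: tuple[Backend, ...] = BACKENDS
) -> Backend:
    """Return the first shared backend that satisfies the institution's policy."""
    for backend in backends:
        if backend.kind in policy.allowed_kinds and backend.region in policy.allowed_regions:
            return backend
    raise LookupError("no shared backend satisfies this institution's policy")
```

An institution that only permits open-weight models hosted in one region is routed to a matching backend, while a policy that no backend satisfies fails loudly instead of silently falling back to a non-compliant one.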

Looking Ahead

As models and tools evolve, EthelFlow’s microservices architecture makes it easier to swap components, add new capabilities, and experiment with alternative providers. This allows the system to remain nimble and pragmatic, adapting quickly to changes in the AI landscape while keeping pedagogy, fairness, and transparency at the center.